Media Effects Research Lab - Research Archive

Unveiling the Nature of Generative AI Customization: Exploring Sense of Control, User Satisfaction, and Fact-Checking Intentions

Student Researcher(s)

Hui Min Lee (Ph.D. Candidate)

Peixin Hua (Ph.D. Candidate)

Rehab Alayoubi (Master's Candidate)

Faculty Supervisor

INTRODUCTION

While generative AI platforms such as ChatGPT and Google Gemini are useful tools for sourcing information, they also pose risks because they can generate misinformation. As these platforms evolve, it is therefore important to understand how users interact with generative AI and process its generated content.

Customization may increase users' perceived control over generated responses. This could improve user satisfaction, but it could also increase perceived source credibility and, in turn, reduce users' intentions to fact-check the generated content. Drawing on the agency model of customization (Sundar, 2008) and the HAII-TIME model (Sundar, 2020), this study therefore investigates how customizing generative AI responses influences user satisfaction and fact-checking intentions.

RESEARCH QUESTION / HYPOTHESES

General RQ: For individuals, controlling for use frequency, familiarity, attitude, and trust toward generative AI, what is the relationship between customizing Gemini responses and both satisfaction with those responses and fact-checking intentions?

H1: Customizing Gemini responses will increase user satisfaction.

RQ1: What is the relationship between customizing Gemini responses and fact-checking intentions?

H2: Customizing Gemini responses will increase sense of control, which is associated with higher satisfaction.

H3: Customizing Gemini responses will increase sense of control, which is associated with higher source credibility and subsequently lower fact-checking intentions.

H4: Customizing Gemini responses will increase heuristic processing, which is associated with higher source credibility and subsequently lower fact-checking intentions.

RQ2: What is the relationship between customizing Gemini responses, systematic processing, message credibility, and fact-checking intentions?

METHOD

This study conducted an online between-subjects experiment using Google's generative AI chatbot, Gemini (gemini.google.com). Participants (N = 194) were recruited from SONA and randomly assigned to one of three conditions (no customization vs. voluntary customization vs. forced customization). All participants interacted with Gemini using four assigned prompts, each containing a different controversial health question that might lead participants to suspect the presence of misinformation. Participants in the voluntary and forced conditions were instructed, respectively, to "feel free to explore customization options" or to "customize" the responses. After the interaction, all participants answered a series of questions measuring the dependent variables.

RESULTS

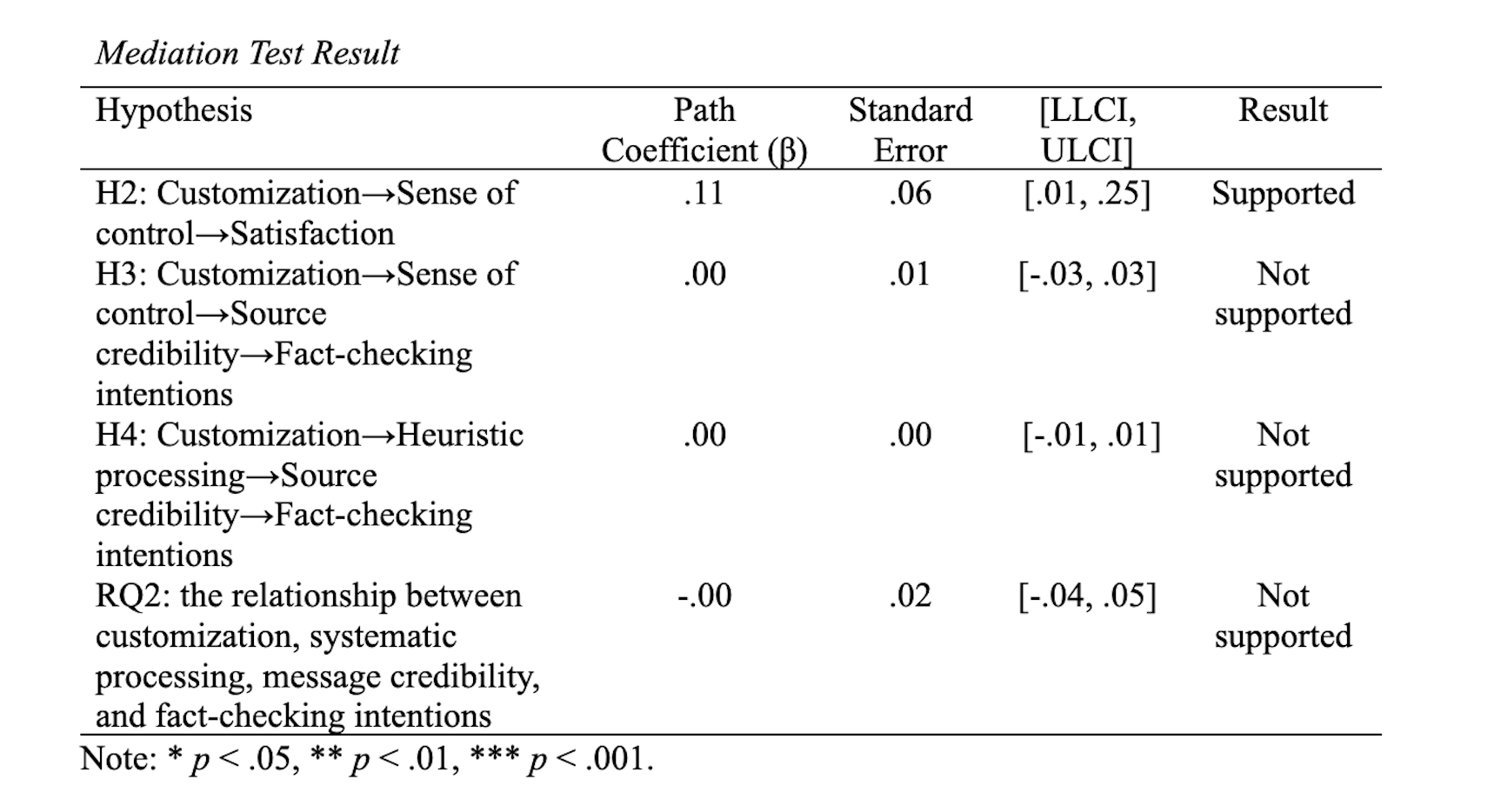

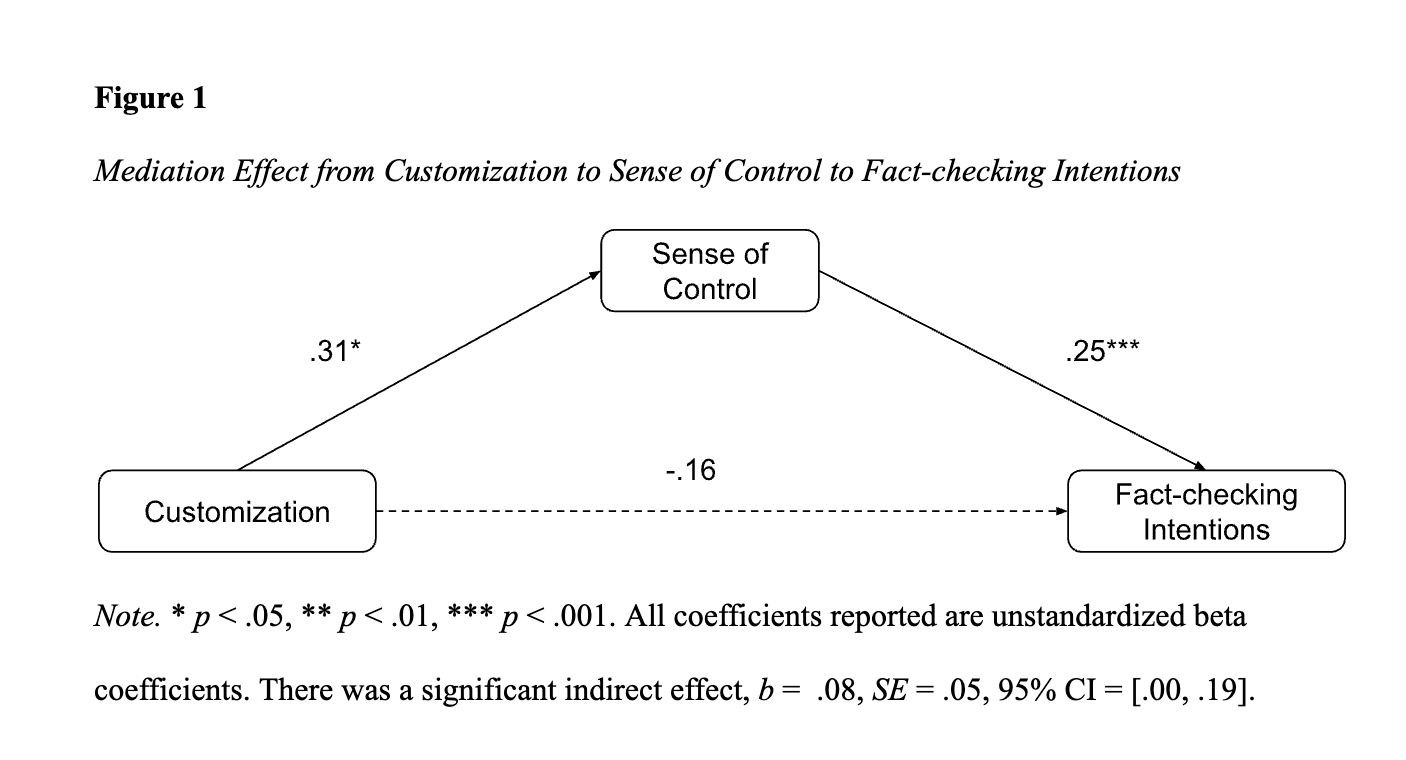

This study investigated the effects of customizing generative AI responses on outcomes such as satisfaction and fact-checking intentions. The results showed that customization increased both satisfaction and source credibility through an increased sense of control. Furthermore, although fact-checking intentions were not affected by source credibility or type of processing, the increased sense of control resulting from customization also increased fact-checking intentions.

Table 1

Hypothesized Mediation Effects

CONCLUSIONS/DISCUSSION

In conclusion, we found that sense of control independently mediated the relationships between customization and each of satisfaction, source credibility, and fact-checking intentions. In other words, although individuals perceived Gemini to be more credible as a result of customization, the sense of control it induced also empowered them to verify the generated responses. This has important implications for generative AI developers, suggesting that merely enabling users to customize the style of generative AI responses may promote socially responsible use of generative AI. We therefore encourage future research to extend this work to other generative AI platforms and to explore the effects of other forms of customization.

For more details regarding the study, contact

Dr. S. Shyam Sundar by e-mail at sss12@psu.edu or by telephone at (814) 865-2173